10Duke is a software company based in London and Helsinki. Over the past 5 years they have been developing web, mobile and desktop applications for some of the biggest brands and companies across the world. The key to their success has been their technology platform which enables them to develop stable and scalable applications faster than others.

Last month, 10Duke released their technology to the Java developer community with the 10Duke SDK for Social Media, a software development kit specifically for Java developers that features extensive libraries for creating Java applications and includes almost 1000 classes of Java code. The 10Duke SDK has been used in more than 40 enterprise production deployments worldwide.

Their SDK helps Java developers to develop applications that can: a) leverage cloud services, such as AWS, b) utilize a variety of application servers (Tomcat, GlassFish, JBoss, etc.), c) run on Linux, Windows or a mixed environment and d) use a variety of databases (MySQL, PostgreSQL, local database, etc.), and much more.

To show how their technology can help start-ups who want to get to market quickly, or enterprise organisations that need stability and scalability, here are a few examples:

Arsenal FC

10Duke partnered with Arsenal to bring a social community to the English Premier League club. Arsenal chose the 10Duke SDK to create a fun online destination for their premium members which included online video clips, text, forums, photo uploads and much more. Because the application was only available to some members, 10Duke integrated the application with Arsenal’s TicketMaster database for user validation. The application specification, build, testing, integration and deployment took just 5 weeks using the 10Duke SDK.

Masher

Masher is a spin-out of BBC Worldwide. The application allows users to create videos by mixing together video, audio and images and adding effects and stock footage. 10Duke was asked to redevelop Masher in order to improve its stability and scalability and reduce the cost of running the application. 10Duke, using its SDK, rebuilt the app in just 9 weeks, at considerably less cost than the original build. The application has since been added to Facebook and several other social networks and is available in multiple languages.

Tekla BIMsight

Tekla, a large Finnish software company working in the construction industry, needed an online distribution site and help centre for their new building information management software package. 10Duke created the distribution site and integrated it with Tekla’s existing CRM system. The help centre featured a forum and video, which 10Duke built online and integrated with the BIMsight software product, enabling users to access the help centre instantly. It took 8 weeks to build and integrate with Tekla BIMsight and the CRM system. The application features enterprise class analytics and 10Duke continue to work with Tekla to enhance their products and services. Tekla BIMsight recently won the BATIMAT 2011 Gold award for IT innovation.

Try it! Anyone can download the 10Duke SDK free for 30 days.

Once again, I went to Codebits. Every year I write how wonderful this event is. As described on the Codebits site we had: 3 days. 24 hours a day. 800 attendees. Talks. Workshops. Lots of food and beverages. 48 hour programming/hacking competition. Quiz Show. Presentation Karaoke. Security Competition. Lots of gaming consoles. LEGO. More food. More beverages. More coding. Sleeping areas. More fun. An unforgettable experience. On Thursday morning, you walk in as a normal person, on Saturday evening, you crawl out – on your knees, entirely finished, but with that warm feeling that you have achieved something and have been part of a huge community that hacks for fun and work. What is new this year: more people and more wacky ideas for fun, such as badges. There is always something going on. Unfortunately, being stuck behind a huge table of books, I can see only a small part of it.

What did I see/do:

- Some good presentations, since my table was near Stage A, where Chris Anderson, co-author of CouchDB: The Definitive Guide, gave the following talk: Couchbase Sync can save the world!

- The effects of the Nuclear Tacos – apparently they are awful. After eating one, for the first 10 minutes you think you are going to die, then you feel awful because you know you are not going to die. As the queue to the restaurant was near me, I saw some white, red and sickly faces – not a pleasant sight. See the Nuclear Tacos Sensor Helmet Gameshow @Codebits 2011, developed by altlab, Lisbon’s Hackerspace, as part of the hacking competition.

- I was given a pleasant drink – I believe whoever made it played with the molecules of the ingredients – no killing field this time.

- Conga – wild music would start and the staff would do a Conga in the main room.

- Skateboards – I believe there was a kind of a contest for daredevil runs.

- Even saw a plastic air gun toy.

I am glad SAPO organizes these kinds of recreation to keep everybody going – they are much-needed wake-up calls – see pictures.

I forgot to mention how much I like Lisbon and the joy of the walk from the hotel to the venue particularly on Saturday morning – it is fresh, calm and beautiful.

I hope one of the organizers will be able to tell you more about Codebits V in the very near future.

As described before, OpenFest is the annual gathering of fans, creators and supporters of open source in Sofia.

Unfortunately I had even more problems understanding the programme than usual. Cyrillic and I do not get on – so I will only tell you about the talks that kind of made sense to me. Michael Kerrisk’s talk seemed interesting: Why Kernel Space Sucks? (Or: Deconstructing two myths) (Or: An abridged history of kernel-userspace interface blunders…). Philip Paeps (one of the Fosdem organisers, among other things) gave a talk on Using FreeBSD in an Embedded Environment. I noticed that, like everywhere else, people here are concerned about the environment – Using Open Source Technologies to Create Enterprise Level Cloud System, Optimize your Costs and Offset your Carbon Footprint on the Environment by Венелин Горнишки, Илиян Стоянов.

After the event, we all meet at the Krivoto restaurant for beer, food, networking and a discussion of the talks of the day. You can guess what I did… tried to decipher the English menu.

Two days after OpenFest, it’s Codebits time in Lisbon, so instead of traveling home one day and flying to Lisbon the next day, I decided to stay an extra day in Bulgaria thanks to my hosts Marian Marinov and his wife Toni. By the way, Marian is the main organizer of OpenFest. We spent the day in Plovdiv, a charming city about 130 km east of Sofia with Roman ruins, typical Bulgarian architecture and much more. One should not forget that Bulgaria was under Ottoman rule for nearly five centuries, which explains the presence of so many mosques.

After Sofia – Lisbon for Codebits.

I arrived a little too early at the venue but I remembered that the out-of-hours entrance was in the basement – the security guards were very kind and gave me a chair so I could wait in comfort. Who said that life at conferences is not charmed!

Now in Sofia for OpenFest. I am always so happily surprised by the warm welcome I get there. Bulgaria might be thought of as a poor, struggling country of the EU but it has a thriving techie community which has developed some great open source projects, one of them being ELWIX.

ELWIX (Embedded multi-architecture lightweight unix platform for special devices) was created by Michael Pounov. Michael’s objective was to make a rock-solid, fast, secure, easily changeable and highly portable OS. This OS can be used for:

- Wireless access points and appliances

- Router and switch devices

- Security surveillance systems

- Access control systems and various other embedded appliances

ELWIX is free to download and upload onto your own devices. The source code can be used or modified as per the BSD license. To get support, please contact Michael.

Damien Sandras was one of the key organizers/founders of Fosdem, and is well known for his project Ekiga, which started as GnomeMeeting.

What is Ekiga? Can you briefly tell us what are the main features?

Ekiga did not start as simple instant messaging software but as GnomeMeeting – a Linux alternative to Netmeeting. One of the purposes of Netmeeting was video chatting between people. What was less noticed is that it was compatible with the H.323 standard and thus interoperable with professional videoconferencing environments. Ekiga is still the only client available on GNU/Linux that is able to communicate with H.323 hardware.

Later, when it was renamed, Ekiga evolved into a SIP client. SIP is now the leading VoIP and IP Telephony protocol.

I would say that the primary intent of Ekiga is to be a softphone, i.e. software you can use instead of a real IP Phone. Nowadays, most enterprise PBX systems are IP systems. Some of them support the standard SIP protocol and are used with hardware-based IP Phones. Ekiga aims to offer all the features such an IP Phone can offer:

- Call Pickup

- Call Hold

- Call Transfer

- Call Diversion

- Busy Lamp Fields (through the contacts list)

- and many more

All of this with the most powerful codecs available today: G.722 for Wideband audio (also named HD Voice) and H.264 for video.

When combined with such an IPBX, Ekiga can display pickup notifications for calls intended for your colleagues, show presence information and make audio and video calls. It can be compared with the software that CounterPath is producing. But of course, Ekiga is a free alternative (as in Free Beer).

To summarize, IM and simple video-chatting are most probably only a very limited subset of what Ekiga can offer.

I believe you started the project late 90s/early 2000. How did it all start?

Back in 2000, I was still a student in Belgium at the “Université Catholique de Louvain”, studying to become a computing science engineer (polytechnics). Like all students, we had a project to complete in order to graduate. As I was already passionate about Linux, I wanted to contribute something back to the community. I started identifying missing tools in the Unix environments together with Marc Lobelle. Marc was the professor responsible for coaching me in my work and judging it. We had the idea of creating a Netmeeting clone and this is how GnomeMeeting was born.

The project went well, and we decided to release it under the GPL license. It was first released on the 2nd of July 2001. GnomeMeeting 1.00 was released 2 years later, then converted into Ekiga a few years ago.

For over a decade, I have worked on it in my spare time.

In July 2001, you had a record number of visitors on the site – 11000 in one week. How did that translate into number of users at the time? And what is the market share of Ekiga now?

Unfortunately, I have no idea about this. Ekiga was the default VoIP client in many Linux distributions. A percentage of them are using it together with the Ekiga.net platform which provides free SIP addresses, but most users are using it with PBX systems or videoconferencing hardware I think.

However, pure IP Telephony softphones like Ekiga are probably still a niche market, especially on Linux. And I guess that people who want simple videochatting have many Windows compatible alternatives available now like Gmail and friends.

We have no firm idea whether Ekiga is used as yet another videochatting software, or as a VoIP solution either for cheap calls or together with an IPBX.

You changed the name from GnomeMeeting to Ekiga – any particular reasons?

In 2006, I had spent much spare time working on SIP support for GnomeMeeting. However, most users were still thinking that GnomeMeeting was GNOME software and a replacement for Netmeeting. In 2006, on Windows platforms, Netmeeting was dead.

We then decided to change the name to something which did not refer to Netmeeting, or GNOME, or chatting, or telephony, or video, because that would limit the software to only one use (and there are so many possible uses…).

While browsing the web for a new name, I found out that Ekiga was an old African technique to communicate from village to village without tam-tams. The technique consists of reproducing notes, without words, emitting the syllable “ke” in a falsetto voice, and repeating it with the corresponding tones.

In one of your old posts you said that “The main problem [with Skype] is not that the program is not Open Source, the problem is that Skype is locking users into a proprietary protocol.” Seven years later, Skype is under the Microsoft umbrella – how do you see its future?

I think that standards always win the battle. It is just a matter of time!

The main (dis)advantage of Skype is that it easily goes through firewalls and NAT gateways, unlike standard protocols like SIP. Many firewalls aren’t SIP-aware, which might be its own advantage.

On the other hand, Microsoft is investing a lot of effort in Microsoft Lync, its Unified Communications software, which is based on SIP. With Microsoft adopting Jabber just like Facebook did, I would not be surprised to see Skype being converted into a SIP client.

What are the other similar projects?

SIP Communicator (renamed Jitsi) and Linphone are good alternatives to Ekiga. But I think we have more features. Jitsi seems to be developed with instant messaging in mind. Linphone is probably more like a softphone, but it lacks a contact list with presence support. Empathy has some SIP support, but it is also very limited.

Which you should use depends on your needs. If you need instant messaging software to be able to communicate with your friends and family on Linux machines, all software mentioned in this article will do the trick. If you have to use it with your SIP-compatible company PBX, then I think Ekiga is the best choice. I am thinking of corporate users using their softphone to place and receive calls instead of their phone, or of users working remotely through a VPN who need to be reachable by all means.

Who are the users of Ekiga? As you are involved with Fosdem, give talks at Guadec, RMLL etc, it feels that Ekiga is not just an open source project but aimed at open source users?

When I released the project for the first time, I was not sure if I would keep working on it. But Open Source users were so enthusiastic about it that I continued releasing new versions. They were and still are my main motivation…

Why is Ekiga no longer the internet telephony application by default in Ubuntu?

You cannot place all software on the first CD of the distribution. You have to make choices. Ubuntu is a user-oriented distribution; it is not targeted at companies. Empathy is in that case the most rational choice.

If you see SIP as a way to place calls with audio and video, then Empathy is clearly the best tool because it offers more than only the SIP protocol and it is well integrated into the GNOME desktop.

I think that one part of the problem is that not all people at Ubuntu understand the difference between chatting and professional IP Telephony/Videoconferencing.

However, from my point of view, it is probably a bad choice due to some misunderstanding. Indeed, Empathy and Ekiga are two completely different pieces of software with different goals. Simply saying “we do not need Ekiga because we have Empathy” is like saying “let’s remove vim because we have LibreOffice”.

On which platforms can it be used? Unix, Windows, Mac etc.?

Ekiga was coded in a portable way to be usable on all platforms. That is why a Windows port exists and a Mac port did exist in the past.

However, even if the Windows port is still maintained, it is not as stable as the original Linux version. The reason is that we lack manpower for other platforms. Any volunteers?

People think that Ekiga should be installed by default on Android. Have you got plans for that?

I also think it would be a good idea. Smartphones and tablets are a huge market and probably the most natural evolution of the desktop.

However, porting Ekiga to Android would require some work. But I’m thinking about it. The first step would be to simplify the software and make it light and streamlined, which is the plan as part of Ekiga 4.00.

I think having a portable version of Ekiga would help a lot of people. Now that you make me think about it, I wonder if it is not even more important than the Windows port. And probably easier.

For a few years, we have rebuilt Ekiga around the Ekiga engine, which is a portable way of doing things. The frontend is clearly separated from the user interface. I suppose that porting Ekiga to Android would simply mean writing a new frontend as well as new audio and video plugins. Anyone want to help?

How often is Ekiga updated and who is in charge of the changes?

We have a team of volunteers working on the software. Robert Jongbloed and Craig Southeren are doing a lot of work on the SIP support through the OPAL library. Julien Puydt is working a lot on the code, especially in the core engine part of Ekiga. Eugen Dedu has been coordinating the releases, bug fixes and patches for a couple of years, and Yannick Defais has been working on a new Ekiga.net platform and the documentation. All volunteer contributions are welcome, invaluable, and too numerous to mention.

We release the software when it is ready. However, fresh blood to help with programming on the project is always welcome to accelerate the development.

What are Ekiga’s challenges after 10 years?

The biggest challenge for me is to keep working on it. A few years ago, I had more spare time. But spending time with my friends and family is often incompatible with Ekiga development. I do not think I will be able to continue coding on Ekiga as much as I did in the past.

The biggest challenge is thus to keep the project moving and up to date while I personally can spend less time on it than I have done. There are lots of opportunities for others to join and help significantly.

What is the future of Ekiga?

I still need to discuss this with the team, but I think one of the big plans may be to make Ekiga more focussed. We should concentrate our efforts on the engine and SIP support. If you look at the Android world for example, it makes no sense to provide an alternative to the address book, to the dialer and to such tools. We should use what is already available on those platforms and integrate with it to provide what we are best at: audio and video through SIP.

I think we should do the same for GNOME: integrate with GNOME’s address book instead of providing ours with our own view of the GNOME address book; integrate with GNOME’s account setup software instead of having our own accounts window, and so on…

A few years ago, it was still logical to integrate with the desktop and to provide its own set of tools. However, things have changed. We see fewer and fewer people using anything other than a well-integrated desktop providing all sorts of tools to manage contacts, e-mails, calendars and so on. The same applies to tablets. We should create plugins for those tools and not have our own views and alternatives to them.

That is my main idea.

I believe you wrote that Ekiga was free as in speech and free as in beer – so who pays the bills?

I have a main job which pays the bills. That is why finding spare time for Ekiga is so difficult because I am devoting all of my time (including the spare time) to my paid job. I am managing a startup named Be IP developing IP Telephony software based on Open Source software (mainly Asterisk).

I think one of the biggest problems with Free software is that it is free as free beer. Everybody needs money to feed the family :-)

Thank you for the interview, Damien

Fancy a trip to Rome but cannot justify the expense? Here is the opportunity –

Giorgio Natili and ActionScript.it have organised Flash Camp Rome on Friday 4th November from 11.00 am to 6.00 pm. The Flash Camp will take place at the Carrot’s Café, Piazza Euclide – one of the trendiest bars in Rome – see Google map.

During the meeting you will have the opportunity to discuss the hottest topics of the moment, have lunch with your friends, drink nice coffee and possibly go home with some goodies.

The sessions planned are:

- Back from MAX with the latest news

- Hands-On on Flash Mobile Development – 2/3 hours, Speakers Mihai Corlan and Ryan Stewart

- Create a game for Blackberry Playbook with Adobe AIR, 1 hour, Speaker Giorgio Natili

So why not go to Flash Camp and spend the weekend in Rome!

Finding the overlaps between Open Data, Public Data, Open Government etc. somewhat confusing, I asked Sam Smith, organizer of OpenTech, the informal, low cost, one-day conference on slightly different approaches to technology, something else and democracy. His long answer to a short email was very helpful, and it’s below, as it might be of interest to you too.

Be careful what the words mean!

In the late 80s and early 90s, there were a lot of conferences where telecoms, software and media people came together. They didn’t know what was going to happen but something big was about to happen.

If Twitter had been around then, I suspect someone sitting in all 3 worlds [would] have tweeted something like this. Apologies for the expletive, I guess it missed the spell check.

Now, we find ourselves in a similar place with the “transparency agenda” (government speak) or “open data” (civil society speak), or “interesting data” (probably people like you). As was the case then, one problem is coming from the use of the same words to mean completely different things. Different definitions, and expectations, are leading to a lot of wasted time and effort.

But let’s take a few steps back for a minute –

The UK Government is running 2 (ish) data consultations until the end of the month. The first called Making Open Data Real, and the second around something called the Public Data Corporation.

Both consultations opened on the same date, close on the same date, and have the word “data” in their titles. Given that, you’d think that they will use “data” in the same way, or are run by the same people. Needless to say, they don’t. Well, sort of. For Government types, this is normal; for everyone else, it’s unstated and confusing. The beginnings of the culture clash.

The “Making Open Data Real” is about, well, open data. According to the Cabinet Office, Open Data refers exclusively to data which meets the Open Data Definition. There are a number of questions, but generally a lot of cheering and applause from the activist community. The “Public Data Corporation”, from within BIS, is getting somewhat of a different reaction. It’s about public data, which is sort of like open data, but not the same thing. The response could not be more polarised (if you have an opinion, reply to the two consultations).

Now, to give credit where it’s due, the Glossaries of Terms at the start of the two consultations are (slightly) different, and they came out from different departments. This is the Government (and the civil servants within) trying to separate two distinct issues. But they are coming from such a dramatically different place to those who have been campaigning in this space for years. From the outside, they both talk about data, both came out of Government, and so those distinctions disappear very quickly.

Having now been in a number of open meetings from a number of angles and communities, everyone is talking about the same consultations, using the same words, throwing their hands up at various aspects, and generally doing much wailing and gnashing of teeth. A short response to that is “please read the glossary” – the same words mean different things to different groups in different contexts at the same time. There is so much time, effort and passion being wasted due to fundamental misunderstandings around the consultations.

In brief, it’s very clear: the Government is not going to put postcoded, compulsory, longitudinal data about school children on the web in a raw form. If you don’t understand what that is, that’s ok, because you don’t need to. There are some possible outputs that can be open data from that dataset, but a very great number that should not be. For example, if you’re looking for data on catchment areas for schools, it would look to be a good source of real data (where the school actually takes people from, rather than where it says it does) that also contains no information about children; that could be completely open data. Other parts must be tightly restricted (it’s about school children after all), but that doesn’t mean those restrictions need to be financial. Fear of people attacking the data can be assuaged by licensing. However, putting any restrictions at all on a dataset means that it does not meet the Open Data Definition. Such a dataset is therefore not open data in the first model, but is within scope for the PDC – it’s not the “open data corporation” for a reason. Open Data advocates got what they asked for; but it may not be what they wanted.

The “ish” part of the 2 consultations is around privacy; and as detail gets fleshed out on the two consultations, a set of questions and possible answers, using existing best practice for release of data, can be opened. As questions need answering – or discussion – on the issue, that’ll come to the fore.

But the focus here is not on the consultations, but on the reactions to them.

At an event last week, an eminent professor gave a 20 minute critique of these open data consultations, which could be summarised as “please don’t take our data distribution funding away”. At an event this week, the head of a campaigning organisation asked: “How can we use our mass-email lobbying tool?”. Not should, not would: can. It’s 1980s campaigning 30 years on, how do we use the tools we have always used now? Open Data is supposed to be better than that.

In some ways, this is the first major Government machinery reform that would not have been possible without the internet, something that requires almost all of Government to change parts of its processes. It is the (comparatively) “easy” output part to the inputs of “Digital By Default”. It has to be a good thing that they don’t think they have it all figured out, and are actively asking for input and feedback. Previously, more copies of anything implied additional cost. With (open) data and the internet, it doesn’t.

The data context is changing so fast, and there are actors who have been there for a long time, who are now being joined by empowered individuals, able to do things which used to take a large organisation. This is familiar to any campaigner in arenas where upstarts have kicked up. In the UK, Mumsnet has effectively replaced prior identifiable groups in that area.

Whether the arguments are right or wrong, there’s a much clearer distinction about the nature of the organisations speaking. It’s not a post-cold-war East vs West discussion, but something much more individual: whether a dramatic shift in environment is looked upon with hope or despair, and whether, in the face of challenges, you want to include or exclude people.

How many programming languages are out there? We’re not aware of any precise count, but Wikipedia lists some 630 notable ones. And new ones are appearing by the day. Only a few of them will make it onto that list, and fewer still will become mainstream. Does that mean one should not bother trying to keep up with them? Well, what if one knew which ones are going to matter?

Opa is one of the new programming languages; it entered the scene in June 2011 with its open source release (though its development started long before that). In the recent article covering InfoWorld’s 2011 Best of Open Source Software Awards, Eric Knorr had this to say about it:

“[…] and although it was too late for Bossie consideration, keep your eye on Opa, the new open source language that InfoWorld’s Neil McAllister believes may transform Web development.”

In only 3 months since its public release, Opa has gained a lot of attention: its homepage has attracted some 100,000 unique visitors and its crowd of followers is growing steadily. What is it, then, that makes Opa so interesting?

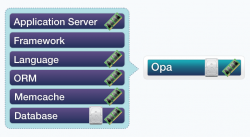

For starters, Opa is a new programming language designed specifically for the web. As such, it rethinks and rebuilds from the ground up the way web applications are developed.

Probably the most notable difference from existing approaches is Opa’s integrated design. The typical technology stack for web applications involves a web server, a DBMS, a client-side language (typically JavaScript), a server-side language with an accompanying web framework, and a database query language (typically SQL). Developers need not only to master all those components, but also to configure them and make them communicate and work together, which often involves writing a lot of (boring and error-prone) boilerplate.

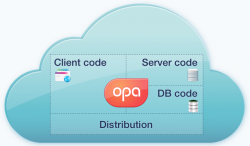

Opa removes all those complexities by offering an integrated platform for web apps. Its programs are automatically distributed between the server and the clients (via automatic translation to JavaScript), and the communication between those agents is handled by the compiler. The database is also tightly integrated with the language, removing the need for any kind of data mapping.

This has two important consequences. The first is simplicity and, as a result, increased productivity for developers, who now only need to master one language and can focus on the application logic and UX instead of spending their time working on the infrastructure.

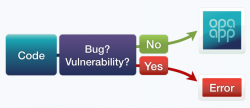

The second outcome is perhaps even more important: increased security of applications. Thanks to tight integration, Opa applications are immune to most of the common threats, including SQL injection and XSS attacks. And because all the client-server communication is taken care of by the compiler, it can be made secure once and for all.
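To make the SQL injection threat concrete, here is a minimal sketch of how it arises in a conventional stack, where queries are assembled as strings in a separate query language. It is not Opa code: it uses Python and its standard `sqlite3` module, and the table, the function names and the payload are all hypothetical, chosen only for illustration.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two example users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_unsafe(conn, name):
    # The query is built by string concatenation, so the user's input
    # becomes part of the SQL text itself.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_safe(conn, name):
    # The input is passed as a bound parameter and is only ever treated
    # as a value, never as SQL.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"  # hypothetical malicious input
    print("unsafe:", find_unsafe(conn, payload))
    print("safe:  ", find_safe(conn, payload))
```

With the payload above, the concatenated query matches every row in the table, while the parameterised one matches none. In a conventional stack the developer has to remember to use the safe form everywhere; the claim made for an integrated language is that this class of mistake is ruled out by construction.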

Tight integration also allows Opa to bring a huge improvement to the runtime and deployment of web applications. The compiler produces a single self-contained executable, which includes all the resources of the application (images etc.) and can be moved between machines and executed with a single command.

The language is also fully “cloud-compatible”: running more instances of the application on many machines is fully automated, and load-balancing and communication between the instances are handled transparently by the platform.

Finally, Opa features a strong, static type system and employs static analysis techniques, which together are capable of detecting a surprisingly high percentage of programming mistakes, thereby improving code quality and typically saving time spent on debugging the application.

For more information about Opa visit its homepage.

If the weather was not too good, it was more than compensated for by the charm of the PHP organisers and delegates or, should I say, by their lovely lunches and coffee breaks. Now in its 5th year, the PHP Barcelona Conference has grown yet again, with over 400 delegates and people coming from as far away as Argentina and Uruguay. What is so special about this conference, you may ask?

The beer evening – Can’t talk about that; I was selling books.

Xdebug – self-explanatory: how to detect and debug problems in PHP scripts with the free, open source tool Xdebug.